Several years ago I read Jerry P. King’s The Art of Mathematics (1992). Chapter 3 deals with numbers, and it contains a statement that has bothered me ever since: “Although they are the most fundamental of mathematical objects, the natural numbers are not found in nature.” Numbers are real enough as ideas, but none of them exists in the natural universe. We may count two people, write the number on a piece of paper, or solve an equation whose answer is two, but the number ‘two’ itself does not exist; we cannot pick it up or put it under a microscope. I have kept an eye out for ‘one’, but even this basic singularity is elusive. Numbers, it seems, are an abstraction.

So where does this leave ‘zero’? Zero means nothing, zilch, emptiness; so is it even a natural number? Is it an integer? Several commentators on mathematics and science have suggested that the invention of zero was as important as that of the wheel. Our system of numbering depends on it: one becomes 100 by adding a couple of zeros. Zero is essential to algebra and calculus, and to solving equations. Without zero, there would be no binary code, and no computers (at least as we know them).

In our numbering system, zero is a placeholder; it occupies a position where no other digit occurs. So in the number 1001, the zeros indicate that there are no tens and no hundreds; without the 0s we would have the number 11. Our place-value system is built on a base of 10, usually referred to as base 10. This means that each time a digit moves one column to the left, its value increases by a factor of 10: 1 becomes 10, then 100, then 1000, and so on. The place-value numbering system was probably invented by the Babylonians about 2000 BC. Their system, however, used base 60, which they inherited from the Sumerians, sometime between 3000 and 2000 BC. The Babylonians had no zero, or any kind of symbol that might represent zero, until later, when they introduced spaces between digits to indicate ‘nothing’. In other words, their ‘nothing’ was a gap in the notation, not a number.
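To make the placeholder idea concrete, here is a small Python sketch. The function names `place_values` and `value_of` are my own, purely illustrative; they simply split a whole number into (digit, place value) pairs and rebuild a number from a list of digits, in base 10 or base 60.

```python
def place_values(n: int, base: int = 10) -> list[tuple[int, int]]:
    """Split n into (digit, place value) pairs, most significant first.

    Illustrative helper (hypothetical name), not from any library.
    """
    if n < 0:
        raise ValueError("n must be non-negative")
    if base < 2:
        raise ValueError("base must be at least 2")
    pairs = []
    place = 1  # value of the current column: 1, base, base**2, ...
    while True:
        n, digit = divmod(n, base)  # peel off the rightmost digit
        pairs.append((digit, place))
        place *= base  # each column to the left is worth `base` times more
        if n == 0:
            break
    return list(reversed(pairs))


def value_of(digits: list[int], base: int = 10) -> int:
    """Rebuild a number from its digits, most significant first."""
    total = 0
    for d in digits:
        total = total * base + d  # shift everything one column left, add d
    return total


if __name__ == "__main__":
    print(place_values(1001))        # [(1, 1000), (0, 100), (0, 10), (1, 1)]
    print(value_of([1, 0, 0, 1]))    # 1001
    print(value_of([1, 1]))          # 11: drop the zeros and the value collapses
    # Base 60: without a placeholder, 1·3600 + 0·60 + 1 and 1·60 + 1
    # would both be written with the digits "1 1".
    print(value_of([1, 0, 1], 60))   # 3601
    print(value_of([1, 1], 60))      # 61
```

The base-60 lines show why the Babylonians needed some mark for an empty column: without it, 3601 and 61 look the same on the tablet.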

Zero, as a number, was probably invented in India in the first few centuries AD. Until recently, the oldest known example was recorded on a tablet that describes a 9th-century Hindu temple in the old fortified city of Gwalior. The zeros there are dots, but this notation shows that the base-10 place-value numbering system was probably commonplace by 876 AD.

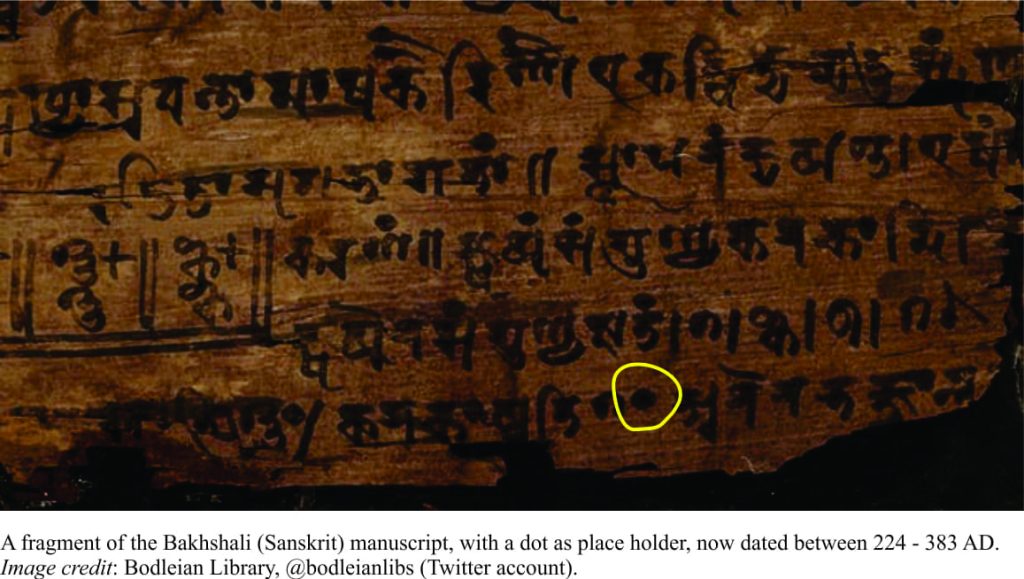

The dot notation is also found in an ancient birch-bark manuscript, the Bakhshali manuscript, discovered in 1881 in what is now Pakistan, of which 70 fragments remain. The manuscript is held at the Bodleian Library, Oxford University. Its age has been much debated, but it is now generally accepted that it is a copy of a much older manuscript. Until recently, it was thought to date from the 8th century, with some scholars placing it as early as the 3rd or 4th century. It is a mathematical work of remarkable sophistication, containing examples of linear and quadratic equations, formulae for square roots and other functions, and arithmetic and geometric progressions, all of which use zero (as a dot) as a placeholder. Carbon dating of the bark (reported on September 14, 2017) now shows that at least part of the manuscript does date from the 3rd or 4th century, with the oldest pages falling between 224 and 383 AD.

The first definition of zero as an actual number was made in 628 AD by the Hindu astronomer and mathematician Brahmagupta. His work, the Brahma-sphuta-siddhanta, explains that zero is obtained by subtracting a number from itself. This was a seminal work, not only in astronomy but also in mathematics; its translation into Arabic around 771 AD was probably an important contribution to the Arab invention of algebra.

By the 7th century, zero, in the form of a dot, had evolved in India both as a placeholder and as an actual number. By some circuitous route, Persian translations of Sanskrit manuscripts provided a basis for Arab developments in mathematics. The representation of zero as an enclosed symbol, a circle (or oval) with nothing inside it, is often credited to the writings of Muḥammad ibn Mūsā al-Khwārizmī, a 9th-century Persian mathematician; it was given the name sifr (Arabic for ‘empty’). In time, Arabic numerals, which now included ‘0’, reached Europe. Leonardo of Pisa, otherwise known as Fibonacci, was instrumental in promoting the usefulness of the numeral system in his 1202 book Liber Abaci (Book of Calculation), almost 600 years after Brahmagupta’s opus.

According to the Merriam-Webster Dictionary, the word ‘zero’ was first used in English in 1598, but I have yet to find the context for this. The etymology of ‘zero’ mirrors the history of the concept: it begins with the Arabic sifr, which, on arriving in Europe, passed perhaps through Italian (zero) and French (zéro) before finally entering English as zero.

Hold a ‘0’ up to the light and look through it: there is nothing, and everything. Look through the zero to new worlds and new views of the universe. The history of zero has done just that; from tentative, humble beginnings, it now helps us create so many possibilities. I wrote this piece two days after Cassini’s final descent into Saturn’s atmosphere. Would such flights have been possible without the genius of zero?

Check out this YouTube video about the Bakhshali manuscript (Bodleian Library)

Here are a couple of books on the topic:

The Nothing That Is: A Natural History of Zero, Robert Kaplan, Oxford University Press, 2000

Zero: The Biography of a Dangerous Idea, Charles Seife, Penguin, 2000
